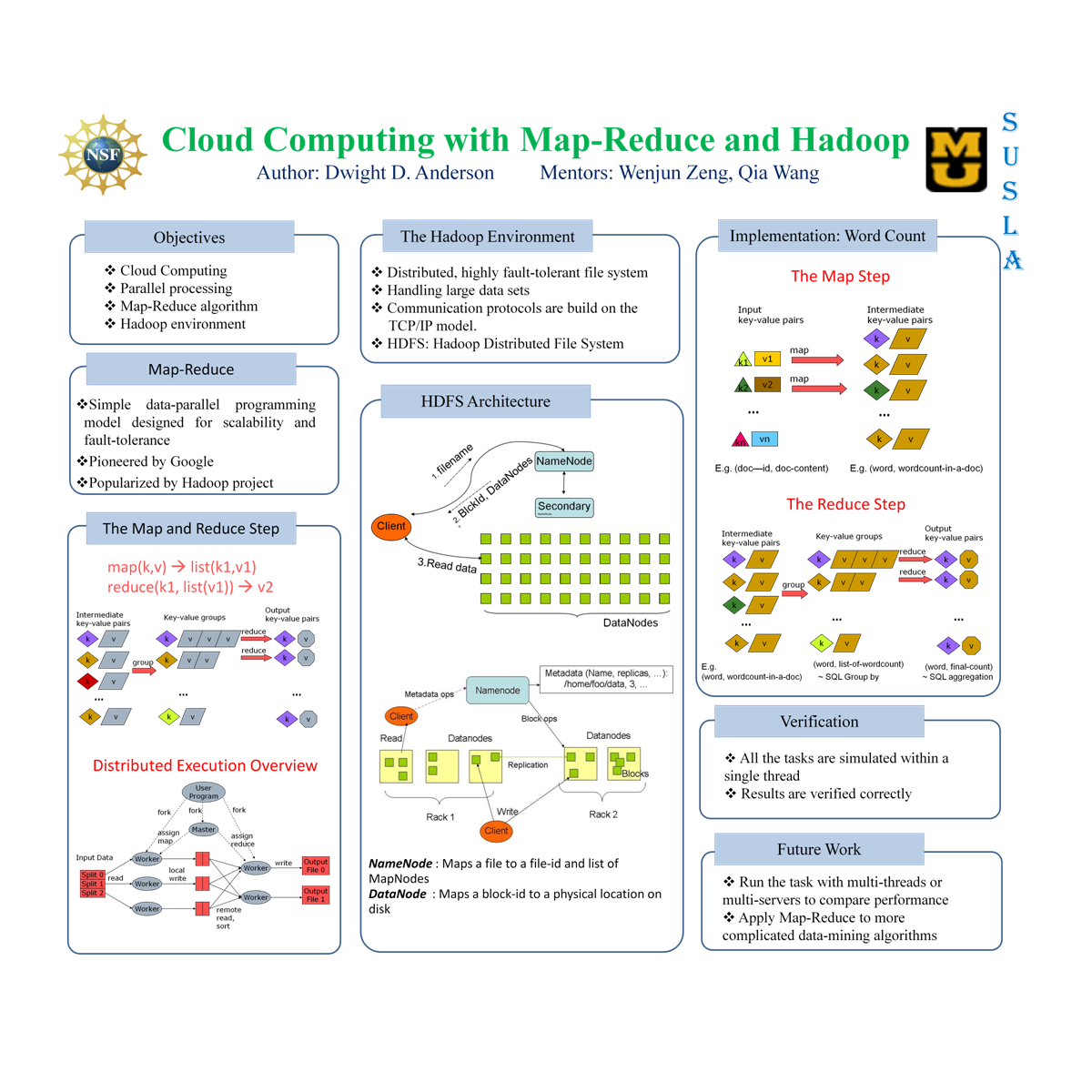

MapReduce's primary purpose is to facilitate data-parallel processing. It has been used by Google's search engine and by Amazon to handle large volumes of product and informational requests. As the information needs of a growing, evolving society become more complex, the processes that handle these datasets must become more efficient in both computational methods and application development. We demonstrated the efficiency gained from parallel processing by implementing a MapReduce word count algorithm. Increasing the number of processes for a job let us observe basic parallel operation, while increasing the input volume in bytes gave us insight into how the Hadoop Distributed File System (HDFS) handles streaming data. The integrated functions operate on these data streams and generate different output depending on the query request. The experiment used only simple functionality and therefore produced only surface-level results. Hadoop can run many different algorithms to accommodate the needs of a system; we employed a basic application that demonstrates the fundamental mechanisms for handling primitive data types. Deeper questions remain, such as how programming methodologies can improve performance, since these design choices can affect the physical components of the commodity computers that make up a cluster.
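The word count job described above passes through three stages: map, shuffle, and reduce. The following is a minimal single-machine sketch in Python, assuming plain text lines as input. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, and this is not Hadoop's Java API; a thread pool stands in for the processes a Hadoop job would spread across cluster nodes.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
import re


def map_phase(line):
    # Map (hypothetical helper): emit a (word, 1) pair for every word in one input line.
    return [(word, 1) for word in re.findall(r"[a-z0-9']+", line.lower())]


def shuffle(mapped_chunks):
    # Shuffle: group every emitted value by its key so each word
    # reaches exactly one reduce call.
    groups = defaultdict(list)
    for pairs in mapped_chunks:
        for word, one in pairs:
            groups[word].append(one)
    return groups


def reduce_phase(item):
    # Reduce: sum all counts collected for a single word.
    word, counts = item
    return word, sum(counts)


def word_count(lines, workers=2):
    # Map and reduce tasks are handed to a pool of workers, standing in
    # for a cluster's task slots. Threads keep the sketch portable;
    # Python's GIL means they show the structure, not a real speedup.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, lines))
        reduced = pool.map(reduce_phase, shuffle(mapped).items())
        return dict(reduced)


if __name__ == "__main__":
    lines = ["the quick brown fox", "the lazy dog", "The fox"]
    print(sorted(word_count(lines, workers=4).items()))
    # → [('brown', 1), ('dog', 1), ('fox', 2), ('lazy', 1), ('quick', 1), ('the', 3)]
```

Changing `workers` mirrors the experiment's step of raising the number of processes for a job: the counts stay the same while the work is divided differently. On a real cluster, Hadoop also splits the input stored in HDFS into blocks and tries to schedule map tasks near the data, which this sketch does not model.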

Funded by a National Science Foundation Research Experiences for Undergraduates (REU) grant.